TL;DR

Most protocol treasuries still pay for inputs: votes, posts, attendance.

Programs that matter fund outcomes: retention, market share, fee growth, security.

To prove impact, always ask: compared to what? Use a control group or baseline to see if the change was real, and then check if it lasts once rewards end.

Optimize each mechanism for a clear North Star metric and benchmark against peers.

Treat incentive spend like a risk budget: set explicit return targets, enforce milestones, and shorten feedback loops.

At first glance, many incentive programs look successful. Dashboards show rising votes, bustling forums, and liquidity flowing in. But once the payouts end, the activity often fades just as quickly. What matters is not who showed up, but who stayed, and whether value compounded.

This piece makes the case for a paradigm shift: from rewarding participation to funding outcomes.

Short-term spikes can look impressive, but only persistence after rewards stop shows true impact.

The measurement gap

It’s easy to celebrate a spike in active wallets, TVL, or votes after a rewards program. Dashboards look impressive, but the real question is:

Did the incentives cause a lasting change?

Most programs stop at correlation. Real impact requires causality and persistence. That means:

Defining metrics up front.

Planning for a counterfactual.

Measuring outcomes after incentives end.

When we applied this framework to Arbitrum’s STIP, the picture became clearer. Using Causal Impact, we found:

+24% in transactions, +29% in unique users, +47% in value transferred during the program.

But activity dropped once rewards ended.

94% of incentivized users were “traders”, a cohort with low post-incentive stickiness.
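Once a counterfactual exists, the arithmetic behind a lift figure like +24% is simple. Building that counterfactual is the hard part. CausalImpact does it by fitting a Bayesian structural time-series model to pre-period data and control series. The minimal Python sketch below assumes a counterfactual series is already in hand, from such a model or from a control group. It shows how in-program lift and post-program persistence might be computed. The function names and the numbers are hypothetical; they are not STIP data.

```python
def relative_lift(actual: list[float], counterfactual: list[float]) -> float:
    """Cumulative lift of observed activity over the counterfactual, as a fraction."""
    if len(actual) != len(counterfactual):
        raise ValueError("series must cover the same periods")
    baseline = sum(counterfactual)
    if baseline <= 0:
        raise ValueError("counterfactual total must be positive")
    return sum(actual) / baseline - 1.0


def persistence_ratio(lift_during: float, lift_after: float) -> float:
    """Share of the in-program lift still present after rewards end."""
    if lift_during <= 0:
        return 0.0  # nothing to persist if the program produced no lift
    return lift_after / lift_during


# Hypothetical daily transaction counts (illustrative only, not STIP data).
during_actual = [124, 130, 118, 126]
during_counterfactual = [100, 104, 96, 100]
after_actual = [103, 101, 99, 101]
after_counterfactual = [100, 100, 100, 100]

lift_during = relative_lift(during_actual, during_counterfactual)
lift_after = relative_lift(after_actual, after_counterfactual)
print(f"lift during program: {lift_during:.1%}")
print(f"lift after program:  {lift_after:.1%}")
print(f"persistence:         {persistence_ratio(lift_during, lift_after):.0%}")
```

In this toy series, a 24.5% in-program lift shrinks to 1% once rewards stop, a persistence of roughly 4%. That is the pattern the STIP analysis warns about.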

The follow-up program, LTIPP, improved governance processes (with councils and advisors) and reporting quality, but the core lesson remained:

Without a counterfactual and persistence testing, treasuries risk funding spikes that quickly evaporate.

The cost of paying for inputs

Paying for inputs (votes cast, posts written, TVL parked) invites participants to optimize the meter instead of the mission.

That’s Goodhart’s Law at work: once a metric becomes a target, it stops measuring what you care about.

When treasuries pay for inputs like attendance, post counts, or “participation scores” instead of outcomes, the result is predictable:

Metric manipulation. Reward quantity and you’ll get quantity: comment farming, box-ticking, and spread-thin liquidity.

Adverse selection. Input‑indexed payouts attract mercenary cohorts with low post‑incentive stickiness.

Signal dilution. Pay‑per‑action schemes flood forums and ballots with low‑signal activity, obscuring real demand and quality work.

Reversion risk. Spikes during the reward window mean-revert when payouts stop; little of the gain compounds into retention, fees, or security.

Example

A DEX pays a flat APR on TVL. Farmers route capital to the easiest pools to farm; pseudo-stable pools balloon while taker experience (execution, slippage) barely improves. When rewards sunset, the liquidity migrates elsewhere. Because payouts targeted parked capital rather than market-share-adjusted flow or post-incentive retention, the program bought attention, not durable volume.

The takeaway isn’t that incentives “don’t work,” but that measurement and allocation discipline are non‑negotiable.

Funding outcomes > rewarding activity

Moving from inputs to outcomes means designing around three principles:

Define the counterfactual and test persistence

Always ask “compared to what?” Before/after charts mislead. Use thresholds, randomized cohorts, non-incentivized pools, or a synthetic control to build a credible baseline, then evaluate whether effects persist weeks after incentives end.

Normalize and compare intelligently

Not all growth is equal. Normalize outcomes per dollar of incentives so programs of different sizes can be compared. Blockworks Research highlights cases where programs generated only 0.07 USD of sequencer revenue per 1 USD of incentives. That is a red flag for redesign, not renewal.

Aim the mechanism at your North Star

Incentives should map to compounding value.

Example: Gauntlet’s Uniswap program on Arbitrum optimized for TVL and volume ROI.

Results: 14.85M USD in market-share-adjusted daily TVL and 689 USD in TVL per 1 USD of incentives. Even after incentives ended, 70% of pools showed durable volume lift.

Bottom line: clarity in mechanism design always beats spray and pray.
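Normalizing by spend is what makes figures like 0.07 USD of revenue, or 689 USD of TVL, per incentive dollar comparable at all. The sketch below is a minimal version of that check. It assumes each program reports one outcome in USD. It also assumes the redesign hurdle is a governance choice; here it is set to 1.0, i.e. break-even. The class, function, and program names are hypothetical. Ratios are only comparable across programs that measure the same outcome, so revenue per dollar and TVL per dollar should not be ranked against each other.

```python
from dataclasses import dataclass


@dataclass
class ProgramResult:
    name: str
    incentives_usd: float  # total incentive spend
    outcome_usd: float     # one outcome in USD, e.g. sequencer revenue

    def per_incentive_dollar(self) -> float:
        if self.incentives_usd <= 0:
            raise ValueError("incentive spend must be positive")
        return self.outcome_usd / self.incentives_usd


def flag_for_redesign(results: list[ProgramResult], hurdle: float) -> list[str]:
    """Names of programs whose outcome per incentive dollar falls below the hurdle."""
    return [r.name for r in results if r.per_incentive_dollar() < hurdle]


# Hypothetical programs measured on the same outcome (revenue).
programs = [
    ProgramResult("program_a", incentives_usd=1_000_000, outcome_usd=70_000),
    ProgramResult("program_b", incentives_usd=500_000, outcome_usd=650_000),
]
for p in programs:
    print(f"{p.name}: {p.per_incentive_dollar():.2f} USD per incentive USD")
print("redesign:", flag_for_redesign(programs, hurdle=1.0))
```

Here program_a lands at 0.07 and is flagged, while program_b returns 1.30 per incentive dollar.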

Evolve the operating model

Source: Gitcoin Forum. The figure illustrates how grant programs compound value over multiple funding rounds, moving from early fundraising toward deeper sensemaking, momentum, and innovation.

Protocols are moving away from one-off, blanket spending and adopting tighter, outcome-driven models.

dYdX committed 8M USD in milestone-based funding across three strategic buckets, with transparent reporting built in.

Gitcoin shifted to a multi-mechanism mix: quadratic funding for early projects, retroactive rewards for proven impact, and onchain community oversight.

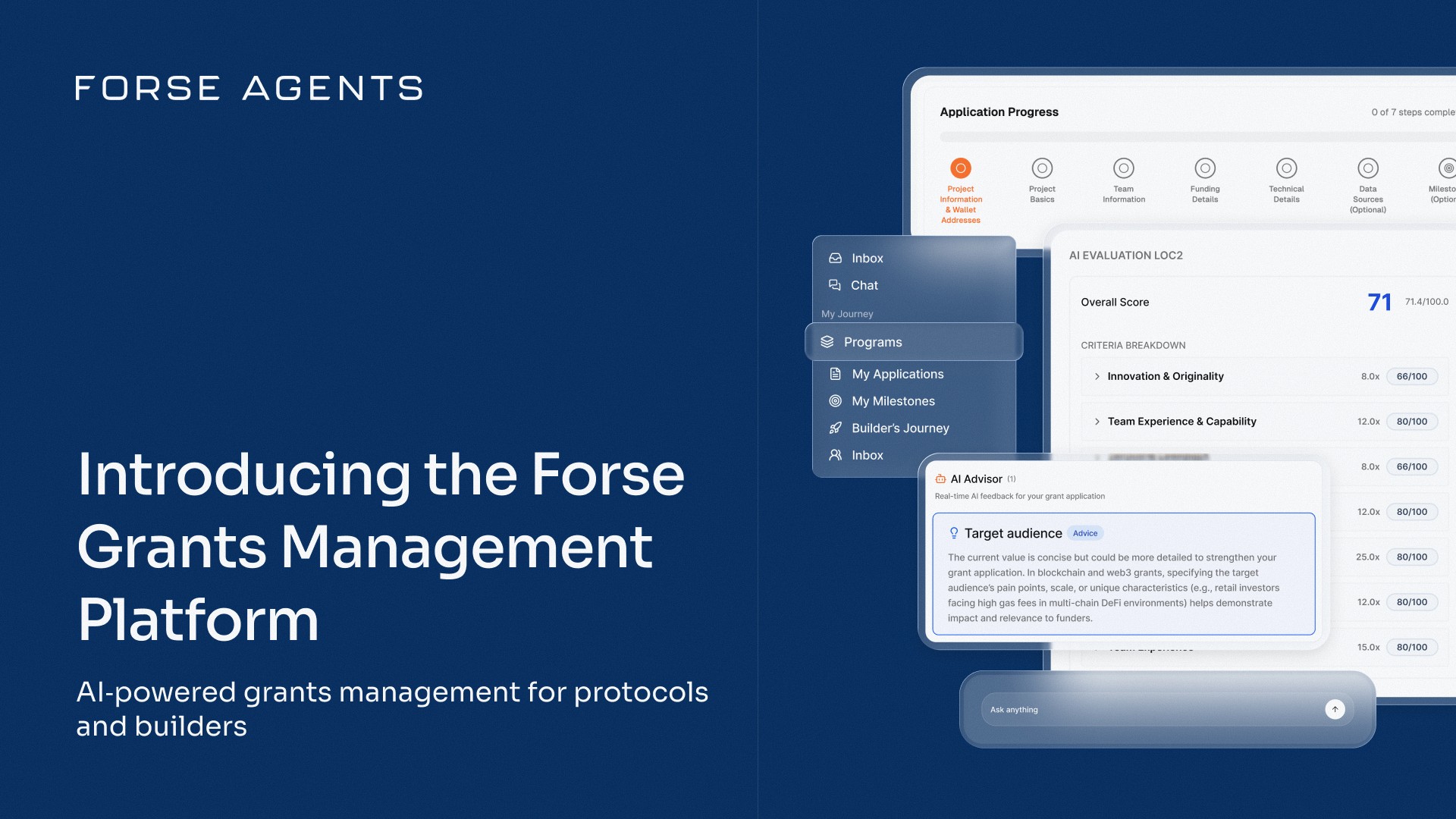

Forse is already helping teams apply this approach in practice:

In Polygon’s Community Grants Program, Forse is building an Impact Terminal to unify onchain and offchain reporting, benchmark grantee performance, and give the Grants Council real-time intelligence. This shifts grants from one-off disbursements to a milestone-gated, continuously monitored model.

Across these cases, the pattern is clear:

Define scope precisely.

Enforce milestones before funds move.

Build continuous feedback loops.

This is what the new operating model looks like.
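"Enforce milestones before funds move" can be expressed as a small state machine. The sketch below is a hypothetical illustration, not Forse's, Polygon's, or dYdX's implementation. Each milestone names a KPI and a target. Tranches are released in order, and only when the observed KPI meets its target. The metric names and amounts are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Milestone:
    description: str
    metric: str         # KPI checked before any release
    target: float       # minimum observed value that unlocks the tranche
    tranche_usd: float  # funds released when the target is met
    released: bool = False


@dataclass
class Grant:
    grantee: str
    milestones: list[Milestone] = field(default_factory=list)

    def release_due(self, observed: dict[str, float]) -> float:
        """Release tranches in order; stop at the first unmet milestone."""
        released_now = 0.0
        for m in self.milestones:
            if m.released:
                continue
            # A missing KPI counts as unmet, so funds never move ahead of proof.
            if observed.get(m.metric, float("-inf")) < m.target:
                break
            m.released = True
            released_now += m.tranche_usd
        return released_now


grant = Grant("example-grantee", [
    Milestone("ship v1", "weekly_active_users", 500, 20_000),
    Milestone("keep users", "d90_retention", 0.30, 30_000),
])
print(grant.release_due({"weekly_active_users": 650, "d90_retention": 0.22}))
print(grant.release_due({"weekly_active_users": 700, "d90_retention": 0.35}))
```

The first review releases only the 20,000 USD tranche because retention is still below target. The second releases the remaining 30,000 USD once that KPI clears.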

What a proper outcomes dashboard reveals

Theory is one thing; practice is another. The real test of an incentive program comes when you open the dashboard and see not just how much activity you bought, but how much value endured.

That’s why Uniswap governance approved StableLab’s proposal to deliver an Incentive Analysis Terminal with Forse. The goal: give the community a transparent view of what its incentive program actually achieved.

Base retained long-term growth while other chains lost most of their TVL, a sign that durable outcomes matter more than temporary inflows.

What the dashboard showed:

$13.6M in TVL and $75M in weekly volume during the program.

4,000+ participants claimed UNI incentives.

But only ~47% of TVL remained after 90 days.

And just ~4% of UNI rewards were still held; most were sold immediately after being claimed.
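Both headline retention numbers reduce to ratios over snapshots. The sketch below is minimal and rests on two assumptions. TVL retention is measured against TVL at the end of the program. Each claimant's current balance is capped at the amount claimed, so tokens bought elsewhere do not count as held rewards. A balance check like this misses rewards moved to other wallets controlled by the same owner, so treat it as an approximation. All names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Claimant:
    address: str
    claimed: float      # reward tokens claimed during the program
    balance_now: float  # current token balance of the same address


def tvl_retention(tvl_program_end: float, tvl_checkpoint: float) -> float:
    """Share of end-of-program TVL still present at a later checkpoint (e.g. day 90)."""
    if tvl_program_end <= 0:
        raise ValueError("end-of-program TVL must be positive")
    return tvl_checkpoint / tvl_program_end


def reward_hold_rate(claimants: list[Claimant]) -> float:
    """Fraction of claimed rewards still held, capping each balance at its claim."""
    total_claimed = sum(c.claimed for c in claimants)
    if total_claimed <= 0:
        return 0.0
    still_held = sum(min(c.balance_now, c.claimed) for c in claimants)
    return still_held / total_claimed


# Hypothetical snapshots.
print(f"{tvl_retention(10_000_000, 4_700_000):.0%} of TVL retained")
claimants = [
    Claimant("0xaaa", claimed=1_000, balance_now=0),
    Claimant("0xbbb", claimed=500, balance_now=50),
    Claimant("0xccc", claimed=200, balance_now=900),  # bought more; capped at 200
]
print(f"{reward_hold_rate(claimants):.1%} of claimed rewards still held")
```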

Pool-level benchmarking revealed important differences:

Stable pairs (e.g. USDC/USDT) attracted inflows quickly but suffered weak retention.

Pseudo-stables pulled liquidity without sustaining volume.

Volatile pairs (e.g. WETH/USDC) cost more upfront but proved far more resilient over time.
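Pool-level benchmarking is a group-by over the same kind of snapshots. The sketch below again uses hypothetical names and figures. It aggregates pools by category and reports two numbers: TVL-weighted retention, and incentive dollars spent per dollar of TVL retained. The second number captures the trade-off described above: a category can cost more upfront and still be cheaper per dollar that stays.

```python
from collections import defaultdict
from typing import NamedTuple


class PoolSnapshot(NamedTuple):
    category: str          # e.g. "stable", "pseudo-stable", "volatile"
    incentives_usd: float  # rewards spent on the pool
    tvl_end_usd: float     # TVL when rewards stopped
    tvl_later_usd: float   # TVL at the post-program checkpoint


def benchmark(pools: list[PoolSnapshot]) -> dict[str, dict[str, float]]:
    """TVL-weighted retention and spend per retained dollar, by category."""
    # Per category: [incentive spend, TVL at end, TVL at checkpoint].
    totals: dict[str, list[float]] = defaultdict(lambda: [0.0, 0.0, 0.0])
    for p in pools:
        t = totals[p.category]
        t[0] += p.incentives_usd
        t[1] += p.tvl_end_usd
        t[2] += p.tvl_later_usd
    report = {}
    for category, (spend, tvl_end, tvl_later) in totals.items():
        report[category] = {
            "retention": tvl_later / tvl_end if tvl_end else 0.0,
            "spend_per_retained_usd": spend / tvl_later if tvl_later else float("inf"),
        }
    return report


pools = [
    PoolSnapshot("stable", 50_000, 4_000_000, 800_000),
    PoolSnapshot("volatile", 80_000, 2_000_000, 1_600_000),
]
for category, stats in benchmark(pools).items():
    print(category, {k: round(v, 4) for k, v in stats.items()})
```

In this toy data the volatile pools cost 60% more in rewards, yet they retain 80% of TVL versus 20%. They also end up cheaper per retained dollar.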

Chain maturity also mattered:

Base, a more established ecosystem, retained value better.

Scroll and Blast, still early-stage, saw liquidity evaporate once rewards ended.

This is the real value of an outcomes dashboard: it doesn’t just track spikes; it tells you where value sticks and where it leaks. That is the information a treasury needs to design programs that fund outcomes, not just participation.

What must change

Protocols that want durable impact need to shift in three areas:

Mindset: Write an investment thesis for each program. Define the behavior you’re funding and the ROI threshold that justifies spend.

Design: Tie funding to milestones and retro rewards. Pay when impact is proven, not for showing up.

Governance hygiene: Bake in guardrails (cohort analysis, sybil checks, exit rules) so treasuries don’t get captured by mercenary farmers.
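"Pay when impact is proven" often takes the form of a retroactive pool split in proportion to measured impact. The sketch below is minimal and assumes two inputs. First, impact scores have already been estimated per participant, for example the counterfactual lift attributed to each grantee. Second, a sybil or cohort review produces an exclusion list. The function and its parameters are hypothetical.

```python
def allocate_retro_pool(
    pool_usd: float,
    impact: dict[str, float],
    excluded: set[str],
    min_impact: float = 0.0,
) -> dict[str, float]:
    """Split a retroactive pool pro rata to measured impact.

    Participants flagged in review (e.g. sybil clusters) and those at or below
    the impact floor receive nothing.
    """
    eligible = {
        who: score
        for who, score in impact.items()
        if who not in excluded and score > min_impact
    }
    total = sum(eligible.values())
    if total <= 0:
        return {}
    return {who: pool_usd * score / total for who, score in eligible.items()}


# Hypothetical impact scores, e.g. attributed counterfactual lift.
impact = {"team_a": 30.0, "team_b": 10.0, "team_c": 60.0, "farm_1": 40.0}
awards = allocate_retro_pool(100_000, impact, excluded={"farm_1"}, min_impact=15.0)
for who, usd in awards.items():
    print(f"{who}: {usd:,.2f} USD")
```

team_b falls below the floor and farm_1 is excluded by review. The whole pool therefore goes to the two teams with proven impact, in a 1:2 ratio.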

A solid incentive program needs clear objectives, KPIs, milestones, and kill criteria to avoid wasted spend.

Quick checklist before you launch a program:

What’s the North Star metric?

What’s the counterfactual baseline?

Which cohorts matter, and how will you test persistence?

What ROI is acceptable, and which milestones unlock funds?

What guardrails prevent mercenary farming or budget leakage?
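The checklist can be written down as a program spec with explicit kill criteria, so a review produces a decision rather than a debate. The fields, thresholds, and three-way decision below are a hypothetical sketch. They assume ROI is measured as North Star outcome per incentive dollar, and persistence as the share of lift that survives after rewards end.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    CONTINUE = "continue"
    REDESIGN = "redesign"
    KILL = "kill"


@dataclass(frozen=True)
class ProgramSpec:
    north_star: str           # e.g. "fees_usd"
    baseline: str             # how the counterfactual is built
    min_roi: float            # North Star outcome per incentive dollar that justifies spend
    kill_roi: float           # below this, stop funding
    min_persistence: float    # share of lift that must survive after rewards end
    max_flagged_share: float  # tolerated share of rewards going to flagged addresses

    def __post_init__(self) -> None:
        if self.kill_roi > self.min_roi:
            raise ValueError("kill_roi must not exceed min_roi")


def review(spec: ProgramSpec, roi: float, persistence: float, flagged_share: float) -> Decision:
    """Apply the spec's kill criteria first, then its continuation criteria."""
    if roi < spec.kill_roi or flagged_share > spec.max_flagged_share:
        return Decision.KILL
    if roi < spec.min_roi or persistence < spec.min_persistence:
        return Decision.REDESIGN
    return Decision.CONTINUE


spec = ProgramSpec(
    north_star="fees_usd",
    baseline="non-incentivized pools",
    min_roi=1.0,
    kill_roi=0.25,
    min_persistence=0.5,
    max_flagged_share=0.10,
)
print(review(spec, roi=0.07, persistence=0.2, flagged_share=0.05).value)
print(review(spec, roi=1.4, persistence=0.3, flagged_share=0.02).value)
print(review(spec, roi=1.4, persistence=0.6, flagged_share=0.02).value)
```

Checking kill criteria before continuation criteria means a program with heavy sybil leakage is stopped even if its headline ROI looks healthy.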

These shifts turn incentives from “giveaways” into “structured capital allocation”, designed to deliver outcomes that last.

Close

After looking at dozens of programs, the lesson we keep coming back to is simple: what remains once the rewards stop is the only thing that really matters. It’s easy to get dazzled by dashboards and big spikes in activity, but we’ve seen firsthand how quickly those numbers can evaporate. The durable gains (sticky users, lasting liquidity, compounding network effects) are the real prize.

The examples outlined above prove the point: evidence beats vibes. Communities that adopt this standard will not only protect their treasuries but also deliver genuine impact, raising the bar for the entire ecosystem.
