Key Takeaways

• STIP was more cost-effective in driving growth of top-line network metrics compared to other large initiatives, such as the ARB airdrop.

• User retention remains a significant challenge: activity levels returned to pre-STIP figures once incentives ended, which points to the need for further refinement and innovation in how incentives are designed.

• STIP primarily attracted users that can be labeled as “Traders” (94% of the incentivized users), suggesting the need to adjust the program design in order to further attract and engage with other user segment types.

• During the application phase of STIP, applications were heavily skewed toward Yield Aggregators (18 applicants) and DEXs (17 applicants). Community effort, however, was concentrated in a handful of applicants in the DEX (~930 hours) and Perpetuals (~590 hours) sectors, most likely due to the perceived relevance and renown of some applicants.

• The application phase changes introduced in LTIPP (creation of the advisor role and implementation of a council system) improved the quality and consistency of the feedback received by applicants while reducing community effort. Whether this translates into more impactful incentive designs remains to be determined.

Introduction

As the race for L2 dominance picks up steam, newcomers and incumbents are deploying considerable amounts of capital and resources in an attempt to grow their network size, attract builders, and expand their user base. In this post, we summarize our recent analysis of the efficacy of Arbitrum's Short-Term Incentives Program (STIP) and compare it with its successor, the Long-Term Incentives Pilot Program (LTIPP). By examining the impact of these programs on key network metrics, user acquisition and retention, and governance participation, we aim to provide actionable insights to enhance the design and efficacy of future incentive initiatives.

STIP Analysis

Causal Impact on Network Metrics

To gauge the effectiveness of STIP, we conducted a Causal Impact Analysis, which revealed significant relative increases in top-line network metrics:

• Total Transactions: 24% increase

• Unique Users: 29% increase

• Total Value Transacted: 47% increase
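To illustrate the idea behind this kind of analysis, here is a minimal sketch of a counterfactual comparison. It is not the model used in the report: Causal Impact analyses are typically built on Bayesian structural time-series models, while this example swaps in a plain least-squares fit. The `control` series (a metric assumed to be unaffected by the program), the `treated` series, and all numbers are hypothetical.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = a + b * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    return a, b


def relative_effect(control, treated, start):
    """Relative uplift of `treated` from index `start` onward.

    The counterfactual is predicted from `control` using a line
    fitted on the pre-intervention period only.
    """
    a, b = fit_line(control[:start], treated[:start])
    counterfactual = [a + b * c for c in control[start:]]
    observed = treated[start:]
    return sum(observed) / sum(counterfactual) - 1.0


# Hypothetical daily values; the program starts at index 4.
control = [100, 102, 98, 101, 99, 103, 100, 102]
treated = [200, 204, 196, 202, 247.5, 257.5, 250, 255]

effect = relative_effect(control, treated, start=4)
print(f"Estimated relative effect: {effect:.0%}")
```

The percentages above should be read in the same way: observed activity during the program relative to a modeled estimate of what activity would have been without it.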

For context, we compared these results to the relative impact of the ARB airdrop, which produced larger effects on transactions and unique users but at a substantially higher cost. The ARB airdrop increased total transactions by 42%, unique users by 85%, and total value transacted by 32%, but it distributed 1.275B ARB tokens (~$1.72B USD) compared to STIP's 50M ARB tokens (~$40M USD). These results suggest that STIP was more cost-effective in driving growth, although the goal of the ARB airdrop was not solely to drive the metrics mentioned above.
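The cost-effectiveness comparison can be made concrete with a rough back-of-the-envelope calculation. The sketch below divides each reported relative increase by the approximate USD value distributed. This "uplift per $1M" ratio is our own simplification for illustration and is not a metric taken from the report; it assumes uplift scales linearly with spend, which is unlikely to hold exactly.

```python
# Figures as quoted in this post; USD values are approximate.
programs = {
    "STIP": {
        "cost_usd_m": 40,  # ~50M ARB
        "uplift_pct": {"transactions": 24, "users": 29, "value": 47},
    },
    "ARB airdrop": {
        "cost_usd_m": 1720,  # ~1.275B ARB
        "uplift_pct": {"transactions": 42, "users": 85, "value": 32},
    },
}


def uplift_per_million(program):
    """Percentage points of uplift per $1M distributed, by metric."""
    cost = program["cost_usd_m"]
    return {metric: pct / cost for metric, pct in program["uplift_pct"].items()}


efficiency = {name: uplift_per_million(p) for name, p in programs.items()}

for name, metrics in efficiency.items():
    summary = ", ".join(f"{m}: {v:.3f}" for m, v in metrics.items())
    print(f"{name}: {summary}")
```

On this simplified measure, STIP comes out at roughly 0.6 percentage points of transaction uplift per $1M, against roughly 0.02 for the airdrop.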

Fig. 1 - Impact Analysis - Total Transactions

Fig. 2 - Impact Analysis - Total Value Transacted

Fig. 3 - Impact Analysis - Unique Users

User Acquisition and Retention

STIP attracted a significant influx of new users, with approximately 30,000 new wallets created during the program. However, data suggests that the program may have been ineffective in retaining these users, as activity levels largely returned to pre-STIP figures once the incentive distribution ended.

Fig. 4 - User Acquisition vs Churn Analysis

Further analysis revealed that 94% of the incentivized users (those with >$20 USD in claimed rewards) were categorized as "Traders," compared to 43% of Arbitrum users overall. These "Trader" users also had the highest average acquisition cost, at ~29 ARB tokens per user.
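As a sketch of how such a segmentation could be computed, the example below filters wallets by the >$20 USD reward threshold, then reports each segment's share of incentivized users and its average ARB claimed per user. Treating "acquisition cost" as ARB claimed divided by incentivized users in the segment is an assumption on our part, and the wallet records, field names, and the "Liquidity Provider" label are hypothetical.

```python
from collections import defaultdict

MIN_REWARD_USD = 20.0  # threshold for counting a user as incentivized


def segment_summary(users):
    """Share of incentivized users and average ARB per user, by segment."""
    incentivized = [u for u in users if u["usd_claimed"] > MIN_REWARD_USD]
    totals = defaultdict(lambda: {"users": 0, "arb": 0.0})
    for u in incentivized:
        totals[u["segment"]]["users"] += 1
        totals[u["segment"]]["arb"] += u["arb_claimed"]
    n = len(incentivized)
    return {
        segment: {
            "share": t["users"] / n,
            "arb_per_user": t["arb"] / t["users"],
        }
        for segment, t in totals.items()
    }


# Hypothetical sample records.
users = [
    {"wallet": "0xa", "segment": "Trader", "arb_claimed": 30.0, "usd_claimed": 36.0},
    {"wallet": "0xb", "segment": "Trader", "arb_claimed": 28.0, "usd_claimed": 33.6},
    {"wallet": "0xc", "segment": "Trader", "arb_claimed": 29.0, "usd_claimed": 34.8},
    {"wallet": "0xd", "segment": "Liquidity Provider", "arb_claimed": 20.0, "usd_claimed": 24.0},
    {"wallet": "0xe", "segment": "Trader", "arb_claimed": 5.0, "usd_claimed": 6.0},  # below threshold
]

summary = segment_summary(users)
for segment, stats in summary.items():
    print(f"{segment}: {stats['share']:.0%} of users, {stats['arb_per_user']:.1f} ARB per user")
```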

Fig. 5 - User Segmentation - All of Arbitrum

Application Process Analysis

During STIP’s application phase, the distribution of proposals was heavily skewed towards Yield Aggregators (18 applicants) and DEXs (17 applicants). The ‘Effort’ the community spent engaging with the program during this phase (measured by the reading and writing times of each forum user) followed a different pattern. Effort was concentrated mainly in the DEX (~930 hours) and Perpetuals (~590 hours) categories, with each of the remaining categories receiving between a few minutes and 110 hours of community effort.

Fig. 6 - User Segmentation - STIP Participants (Active)

Notably, most of this Effort was directed towards High-Value Posts, indicating meaningful contributions from the community. This suggests that while engagement was unevenly distributed, it was largely focused on providing substantive feedback and critique.

Fig. 7 - User Acquisition Cost by Segment

LTIPP Comparison

To assess the impact of the changes introduced in LTIPP, we compared the design differences between the two incentive programs in terms of governance operations and proposal feedback.

Overall, our results can be summarized as follows:

• During STIP, a handful of proposals received most of the attention while others were largely neglected.

• The advisor role and council system in LTIPP led to more coherent and equal high-value feedback across applications (88.5% high-value posts in LTIPP vs 39.3% in STIP, p-value: 3.4e-61)

• Effort in the Arbitrum Governance Forum was significantly lower for LTIPP, likely due to the overall improved quality of submitted proposals (difference in average effort, p-value: 0.0024)
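For readers who want to see how a difference in the share of high-value posts could be tested, here is a minimal sketch of a two-proportion z-test. The post does not state which test the report used, so this is one common choice rather than the authors' method. The post counts are hypothetical, picked only to match the quoted percentages, so the resulting p-value will not reproduce the reported one.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Two-sided z-test for the difference between two proportions."""
    p_a = successes_a / total_a
    p_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_a - p_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 177 of 200 posts (88.5%) vs 393 of 1000 posts (39.3%).
z, p_value = two_proportion_z_test(177, 200, 393, 1000)
print(f"z = {z:.2f}, p-value = {p_value:.2e}")
```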

Our findings suggest that the structural changes implemented in LTIPP were effective in streamlining the application process, ensuring more consistent and valuable feedback, and reducing the burden on the community.

Recommendations and Final Thoughts

Based on our analysis, we recommend the following strategies for enhancing the impact of future incentive programs at Arbitrum:

Retention Mechanisms: Incorporate mechanisms that sustain user engagement beyond the initial incentive period to address the challenge of user retention.

Audience Diversification: Experiment with incentive designs that target user segments beyond "Traders" to optimize acquisition costs and promote a more diverse ecosystem.

Iterative Improvement: Leverage empirical data to continuously refine incentive frameworks, adopting successful elements from LTIPP, such as the advisor role and council system.

StableLab is dedicated to providing accurate analyses to support the Arbitrum community. By iterating incentive frameworks based on these insights, we aim to foster sustained growth and alignment with the Arbitrum community’s interests.

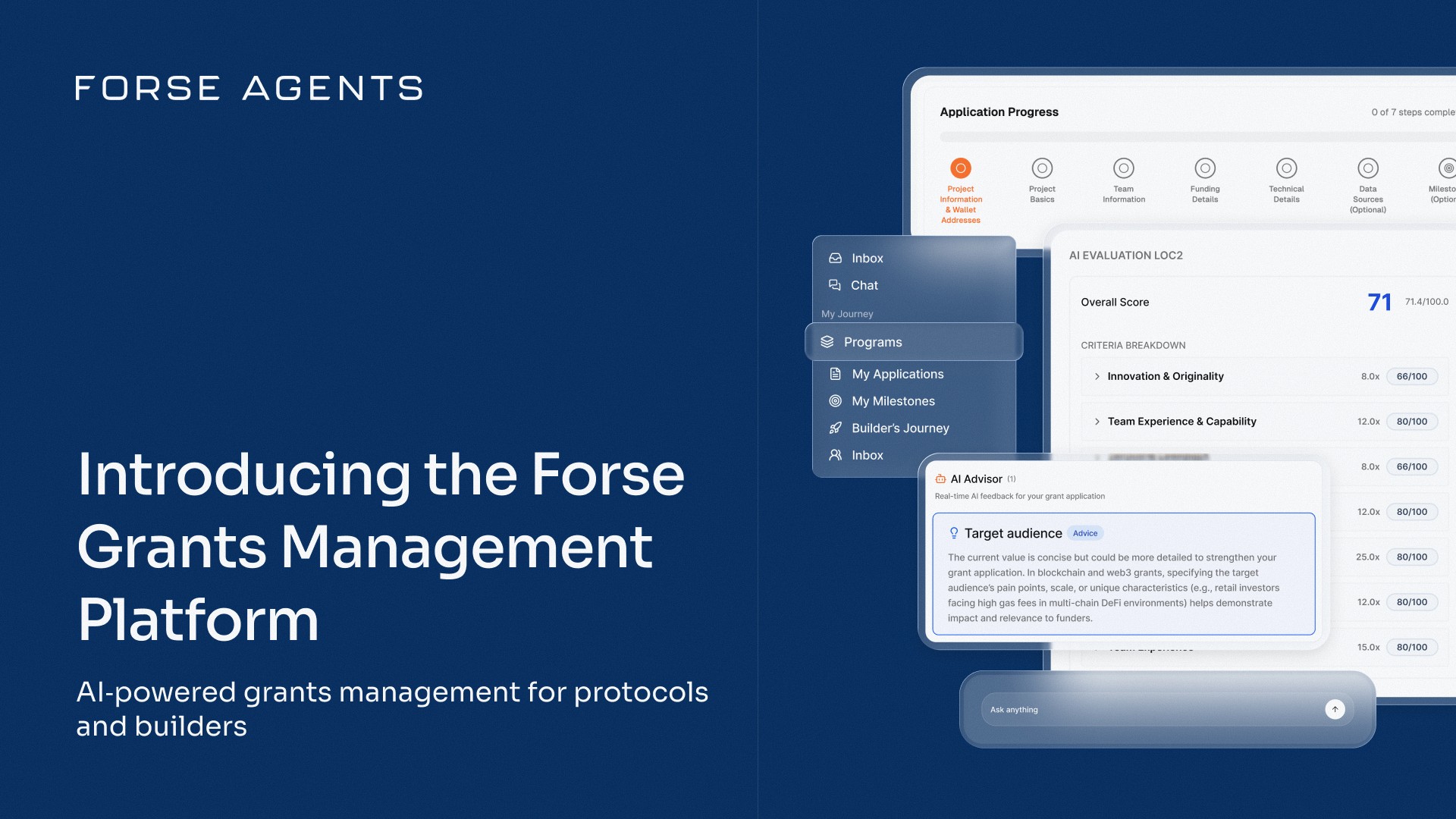

Check out the Forse STIP Dashboard

Read the Full PDF Research Report: LINK
