Most crypto projects design tokens backwards: they set a supply schedule first, then hope demand shows up. Renee D. is reversing that. She’s built a modeling tool that lets teams test token dynamics under real-world volatility, before launch.

Originally built for Open Matter Network (a ZK/MPC compute protocol), the tool models emissions, staking, liquidity, and user adoption using Monte Carlo simulations. The goal: make token design measurable, not speculative.

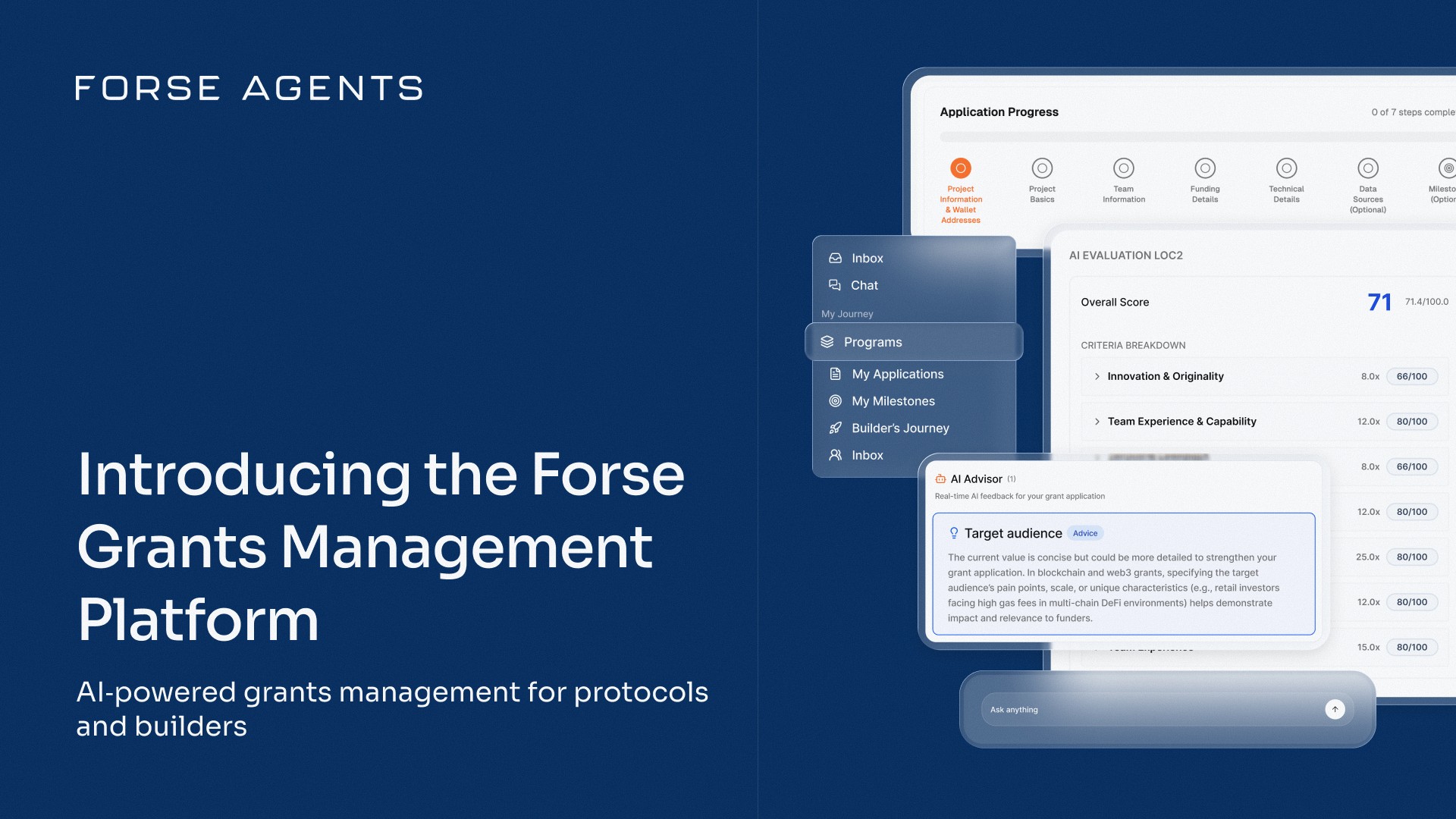

In this Interview Series, Renee walks the StableLab team through the system: how it works, why it matters, and what most teams are still missing.

Meet the Guest: Renee D.

Renee Davis has been deep in governance design for years. She founded Talent DAO, a pioneering research DAO focused on organizational science, helped launch Rare Compute Foundation, and now leads development at Open Matter Network.

Her background is in computational social science, with a focus on simulations, agent modeling, and systems thinking. After years of seeing tokenomics used as a buzzword with little rigor behind it, she decided to build something better.

Her current obsession: turning tokenomics from pitch-deck aesthetics into models you can run, stress-test, and iterate on.

From Theory to Simulation: Breaking Down the Tool

Renee’s framework isn’t a whitepaper or a spreadsheet; it’s a working simulation stack. Built in Python with Streamlit, it includes three interconnected modules designed to test token mechanics under real-world uncertainty.

Each simulation targets a different layer of token design: supply, emissions, and infrastructure cost. Together, they give protocol teams a way to pressure-test assumptions before launch and to adapt to dynamic market conditions rather than idealized ones.

1. Token Supply Simulator

This module models how a token behaves over time under probabilistic stress, tracking price, supply, risk, liquidity, and adoption.

It captures:

Market cap and price trajectories

Circulating vs staked supply

Emissions, burns, and deflationary dynamics

Risk-adjusted performance via Sharpe ratio

Adoption curves and liquidity constraints

A key innovation is the dynamic emission function: token emissions increase with protocol profits, but only up to a point. After that, emissions decrease. This inverted-U curve is designed to prevent runaway inflation while rewarding actual usage.

“We want to be more like Bitcoin and less like Ethereum,” Renee explained. “Emission should be responsive, not just linear.”
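A simple quadratic in profit can illustrate the inverted-U shape. This also fits the "dynamic quadratic emission rate" Renee mentions in the Q&A. The sketch below is a minimal illustration, not the tool's code. The function names `dynamic_emission` and `peak_profit`, the form a·p − b·p², and the coefficient values are all assumptions chosen for readability.

```python
def dynamic_emission(profit: float, a: float = 2.0, b: float = 0.01) -> float:
    """Hypothetical inverted-U emission curve: E(p) = a*p - b*p**2, floored at zero.

    Emissions rise with profit up to the peak at p* = a / (2*b),
    then fall, reaching zero at p = a / b.
    """
    if profit <= 0:
        return 0.0  # no profit, no new tokens
    return max(0.0, a * profit - b * profit ** 2)


def peak_profit(a: float = 2.0, b: float = 0.01) -> float:
    """Profit level at which emissions are highest (the vertex of the parabola)."""
    return a / (2 * b)


# Usage: emissions climb, peak, then decline back to zero.
for p in (0, 50, 100, 150, 200, 250):
    print(f"profit={p:>4}  emission={dynamic_emission(p):7.2f}")
```

With these placeholder values, emissions peak at a profit of 100 and fall back to zero at 200. In practice, the coefficients would be calibrated from the simulations rather than fixed by hand.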

The model also integrates randomness into each run using Monte Carlo simulation, allowing teams to explore the distribution of possible outcomes, not just the average scenario.

Figure: Example output from Renee's Monte Carlo simulation tool, showing token price evolution, user adoption, risk-return scatter, and more. Used to pressure-test financial assumptions under real-world volatility.
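A minimal Monte Carlo sketch shows what "a distribution of outcomes" means in practice. It assumes daily log-returns are normally distributed with made-up drift and volatility values. The names `simulate_final_price` and `monte_carlo` are hypothetical. The real tool models supply, staking, and adoption alongside price.

```python
import math
import random
import statistics


def simulate_final_price(p0: float, mu: float, sigma: float, days: int, rng: random.Random) -> float:
    """One stochastic price path: each day's log-return is drawn from Normal(mu, sigma)."""
    log_price = math.log(p0)
    for _ in range(days):
        log_price += rng.gauss(mu, sigma)
    return math.exp(log_price)


def monte_carlo(p0=1.0, mu=0.0005, sigma=0.05, days=365, runs=1000, seed=42):
    """Repeat the path many times and summarise the spread of final prices."""
    rng = random.Random(seed)  # fixed seed keeps runs reproducible
    finals = [simulate_final_price(p0, mu, sigma, days, rng) for _ in range(runs)]
    cuts = statistics.quantiles(finals, n=20)  # 19 cut points: 5th, 10th, ..., 95th percentile
    return {"p5": cuts[0], "median": statistics.median(finals), "p95": cuts[-1]}


print(monte_carlo())
```

Reporting the 5th, 50th, and 95th percentiles shows a team how bad a plausible downside looks. A single average path cannot do that.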

2. Emission Rate Simulator

If the first simulation deals with how supply behaves, this one governs how it gets distributed and when.

It allows teams to:

Define profit-based emission schedules

Set investor and team vesting parameters

Calibrate release slopes and cliffs

Allocate emissions across categories (staking, public sale, ecosystem treasury)
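The vesting and allocation parameters above can be sketched as a cliff-plus-linear unlock and a proportional split. This is an illustration under assumptions. The 12-month cliff that releases 25%, full vesting at 36 months, and the category weights in `ALLOCATION` are placeholders. The function names are hypothetical.

```python
def vested_fraction(month: int, cliff_months: int = 12, total_months: int = 36,
                    cliff_release: float = 0.25) -> float:
    """Share of an allocation unlocked by `month` under a cliff-plus-linear schedule."""
    if month < cliff_months:
        return 0.0  # nothing unlocks before the cliff
    if month >= total_months:
        return 1.0  # fully vested
    # Release slope: the remainder unlocks linearly between the cliff and total_months
    slope = (1.0 - cliff_release) / (total_months - cliff_months)
    return cliff_release + slope * (month - cliff_months)


# Hypothetical category weights for newly emitted tokens
ALLOCATION = {"staking": 0.5, "public_sale": 0.2, "ecosystem_treasury": 0.3}


def split_emission(amount: float, allocation: dict = ALLOCATION) -> dict:
    """Divide one period's emission across categories in proportion to their weights."""
    total_weight = sum(allocation.values())
    return {name: amount * w / total_weight for name, w in allocation.items()}


for m in (6, 12, 24, 36):
    print(m, round(vested_fraction(m), 3))
print(split_emission(1_000))
```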

Instead of fixed, time-based unlocks, emissions here are tied to actual protocol performance. This means tokens get distributed when the protocol is working, not just because a date passed.

The engine uses geometric Brownian motion, a modeling method common in traditional finance, to simulate volatility in profit projections over multi-year timeframes. It brings financial realism to token design.
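Geometric Brownian motion and a profit-gated emission rule fit together in a few lines. In this sketch, `gbm_profit_path` uses the standard exact log-normal GBM step. `profit_based_emissions`, its threshold, and its rate are hypothetical stand-ins for the tool's profit-based schedule, which the interview does not specify.

```python
import math
import random


def gbm_profit_path(p0: float, mu: float, sigma: float, years: int,
                    steps_per_year: int = 12, seed=None) -> list:
    """Simulate protocol profit per period with geometric Brownian motion.

    Exact log-normal step: P(t+dt) = P(t) * exp((mu - sigma^2/2) dt + sigma sqrt(dt) Z).
    """
    rng = random.Random(seed)
    dt = 1.0 / steps_per_year
    path = [p0]
    for _ in range(years * steps_per_year):
        z = rng.gauss(0.0, 1.0)  # standard normal shock
        path.append(path[-1] * math.exp((mu - 0.5 * sigma ** 2) * dt + sigma * math.sqrt(dt) * z))
    return path


def profit_based_emissions(profits: list, threshold: float, rate: float) -> list:
    """Hypothetical rule: emit `rate` tokens per unit of profit above `threshold`, otherwise nothing."""
    return [rate * max(0.0, p - threshold) for p in profits]


profits = gbm_profit_path(p0=100.0, mu=0.15, sigma=0.4, years=3, seed=1)
emissions = profit_based_emissions(profits, threshold=100.0, rate=2.0)
print(f"periods with emissions: {sum(e > 0 for e in emissions)} of {len(emissions)}")
```

One design caveat applies. GBM keeps profit strictly positive, so it can model weak periods but not outright losses. Losses would need a different process.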

“If you’re only emitting tokens when the protocol’s doing well, you’re not just reducing inflation, you’re aligning incentives with reality.”

The result: a system that adjusts over time, favors early adopters if designed that way, and helps teams visualize trade-offs between long-term sustainability and short-term momentum.

3. Gas Cost Estimator

This is the simplest module, but crucial for compute-heavy protocols. It models the cost of deploying workloads in decentralized compute environments like Open Matter Network.

It accounts for:

Resource requirements (e.g. GPU, memory, bandwidth)

Hardware pricing from node operators

Electricity costs and provider-specific fees

Estimated total cost per container deployment

Think of it as AWS pricing logic for permissionless infrastructure. It’s particularly useful for protocols running ZK workloads, scientific research, or AI inference, where cost modeling isn't optional.
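The cost logic can be expressed as hardware plus electricity plus a provider fee. The following are all assumptions for illustration, not Open Matter Network's actual pricing model:

- the field names
- the choice to apply the fee as a percentage of the subtotal
- every price below

All amounts are assumed to be in a single currency unit.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    gpu_hours: float
    memory_gb_hours: float
    bandwidth_gb: float
    power_kw: float       # average node power draw while the container runs
    runtime_hours: float


@dataclass
class ProviderQuote:
    gpu_per_hour: float          # hardware price set by the node operator
    memory_per_gb_hour: float
    bandwidth_per_gb: float
    electricity_per_kwh: float
    fee_rate: float              # provider-specific fee as a fraction of the subtotal


def estimate_container_cost(w: Workload, q: ProviderQuote) -> float:
    """Hypothetical total cost of one container deployment."""
    hardware = (w.gpu_hours * q.gpu_per_hour
                + w.memory_gb_hours * q.memory_per_gb_hour
                + w.bandwidth_gb * q.bandwidth_per_gb)
    electricity = w.power_kw * w.runtime_hours * q.electricity_per_kwh
    return (hardware + electricity) * (1.0 + q.fee_rate)


job = Workload(gpu_hours=2, memory_gb_hours=32, bandwidth_gb=10, power_kw=0.4, runtime_hours=2)
quote = ProviderQuote(gpu_per_hour=1.5, memory_per_gb_hour=0.01, bandwidth_per_gb=0.05,
                      electricity_per_kwh=0.2, fee_rate=0.10)
print(f"estimated cost per deployment: {estimate_container_cost(job, quote):.3f}")
```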

Together, these three modules form a composable toolkit for DAO operators, token engineers, and protocol designers who want to move beyond storytelling and into simulation.

“Too many token models assume the future,” Renee said. “We simulate it: messy, volatile, and all.”

Highlights from the Q&A

After the demo, the team jumped in with questions on competition, staking, demand, and what most models still miss.

Raph: How does a Monte Carlo simulation work under the hood?
Renee: It samples from distributions, either uniform or normal, to inject randomness (stochastic noise) into the model. It runs simulations over time for variables like token price, market cap, Sharpe ratio, liquidity, and adoption. The result is a range of possible outcomes rather than one fixed forecast.
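Renee's description can be sketched for a single metric, the Sharpe ratio: random draws from uniform or normal distributions, repeated over many runs. The return parameters and function names here are hypothetical. The uniform distribution is scaled to have the same standard deviation as the normal one, so the two noise models are comparable.

```python
import math
import random
import statistics


def sample_returns(n: int, dist: str, rng: random.Random,
                   mean: float = 0.001, spread: float = 0.03) -> list:
    """Draw n daily returns from a normal or a uniform distribution with the same mean and std dev."""
    if dist == "normal":
        return [rng.gauss(mean, spread) for _ in range(n)]
    if dist == "uniform":
        # A uniform on [m - h, m + h] has std dev h / sqrt(3), so h = spread * sqrt(3) matches
        half_width = spread * math.sqrt(3)
        return [rng.uniform(mean - half_width, mean + half_width) for _ in range(n)]
    raise ValueError(f"unknown distribution: {dist!r}")


def sharpe_ratio(returns: list, risk_free_daily: float = 0.0, periods_per_year: int = 365) -> float:
    """Annualised Sharpe ratio: mean excess return divided by its standard deviation."""
    excess = [r - risk_free_daily for r in returns]
    return statistics.mean(excess) / statistics.stdev(excess) * math.sqrt(periods_per_year)


def sharpe_distribution(dist: str, runs: int = 200, days: int = 365, seed: int = 0) -> list:
    """One Sharpe ratio per simulated year; the spread across runs is the Monte Carlo output."""
    rng = random.Random(seed)
    return [sharpe_ratio(sample_returns(days, dist, rng)) for _ in range(runs)]


for dist in ("normal", "uniform"):
    ratios = sharpe_distribution(dist)
    print(dist, round(statistics.median(ratios), 2), round(min(ratios), 2), round(max(ratios), 2))
```

Each run yields one Sharpe ratio. The result is the spread across runs, not any single value.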

Kene: Can the simulation factor in competition, like a new protocol entering the market?
Renee: Not directly. We don't model competitors per se, but higher market volatility values can reflect a hostile or competitive environment. I haven’t yet modeled shifting market share, but it’s a great idea and something I’ll explore.

Raph: How does the model capture demand?
Renee: Through demand and adoption parameters:

Max users

Adoption rate (defines S-curve steepness)

Utility factor (correlation between usage and token price)

Liquidity parameters, such as price impact and market maker participation, also reflect demand indirectly.
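The adoption parameters map naturally onto a logistic S-curve. In this sketch, `max_users` and `adoption_rate` follow the names in the list above. `midpoint` and the linear `usage_linked_price` relationship are assumptions. The interview describes the utility factor as a correlation between usage and price, not as this exact formula.

```python
import math


def adopted_users(t: float, max_users: float, adoption_rate: float, midpoint: float) -> float:
    """Logistic S-curve: adoption_rate sets the steepness, midpoint is when half of max_users have joined."""
    return max_users / (1.0 + math.exp(-adoption_rate * (t - midpoint)))


def usage_linked_price(base_price: float, users: float, max_users: float, utility_factor: float) -> float:
    """Hypothetical linear link: price scales with the adopted share of max_users, weighted by utility_factor."""
    return base_price * (1.0 + utility_factor * users / max_users)


for month in (0, 6, 12, 18, 24):
    users = adopted_users(month, max_users=10_000, adoption_rate=0.5, midpoint=12)
    print(month, round(users), round(usage_linked_price(1.0, users, 10_000, utility_factor=0.8), 3))
```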

Kene: What about staking behavior? Like incentivized lockups or panic unstakes?
Renee: Currently, we model:

Staking APR

Stake rate (percentage of tokens staked)

Emission mechanics (daily or dynamic quadratic emission rate)

But we don’t yet model behavioral aspects like mass unstakes during volatility. That’s something I’d like to add via agent-based modeling.
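Staking APR and stake rate combine into a simple supply-growth projection. This sketch assumes three things: rewards are newly minted, they compound daily, and the stake rate stays fixed. Like the current model, it does not capture panic unstaking. The function names are hypothetical.

```python
def daily_staking_rewards(supply: float, stake_rate: float, staking_apr: float,
                          days_per_year: int = 365) -> float:
    """New tokens paid to stakers in one day: staked balance times the daily share of the APR."""
    staked = supply * stake_rate
    return staked * staking_apr / days_per_year


def project_supply(initial_supply: float, stake_rate: float, staking_apr: float, days: int) -> list:
    """Daily supply path, assuming rewards are newly minted and stake_rate stays constant."""
    supply = initial_supply
    history = [supply]
    for _ in range(days):
        supply += daily_staking_rewards(supply, stake_rate, staking_apr)
        history.append(supply)
    return history


path = project_supply(1_000_000, stake_rate=0.5, staking_apr=0.10, days=365)
print(f"supply inflation after one year: {path[-1] / path[0] - 1:.2%}")
```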

Mel.eth: Is protocol-owned liquidity (POL) included?
Renee: Not yet. We track liquidity indicators like price impact, slippage, and depth, but not the source or stickiness of that liquidity. It’s a missing piece, especially for long-term planning.
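Price impact and depth are often illustrated with a constant-product (x·y = k) pool. The interview does not say how the tool computes these indicators. Treat this as an assumed AMM model with a hypothetical function name, not the tool's method.

```python
def constant_product_buy(token_reserve: float, quote_reserve: float, quote_in: float,
                         fee: float = 0.0) -> tuple:
    """Buy tokens from an x*y=k pool; returns (tokens_out, price_impact).

    price_impact compares the average execution price with the pre-trade spot price.
    """
    spot_price = quote_reserve / token_reserve  # quote units per token before the trade
    k = token_reserve * quote_reserve
    priced_in = quote_in * (1.0 - fee)  # only the post-fee amount is priced on the curve
    tokens_out = token_reserve - k / (quote_reserve + priced_in)
    execution_price = quote_in / tokens_out
    return tokens_out, execution_price / spot_price - 1.0


for depth in (1_000_000, 10_000_000):
    _, impact = constant_product_buy(depth, depth, quote_in=10_000)
    print(f"pool depth {depth:>11,}: price impact {impact:.2%}")
```

With no fee, a 10,000-unit buy moves the price by about 1% in a pool holding 1,000,000 units of each asset. In a pool ten times deeper, it moves the price by about 0.1%.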

Nneoma: Any real examples of teams using this tool to pivot their tokenomics?
Renee: Yes, Open Matter. Based on the simulations, we shifted from a linear emission model to one based on protocol profit. It gave us the confidence to reduce inflation while still rewarding usage.

Kene: Could this be adapted for a Layer 2 with broader ecosystem incentives, like Scroll or Arbitrum?
Renee: Right now it’s protocol-level. Modeling full L2 ecosystems would require adding cross-app incentives, shared liquidity flows, and even sequencer dynamics. It's doable, but much more complex.

Jose: What’s your view on front-loaded vs. tapered emissions?
Renee: I lean toward front-loaded, like Bitcoin. It rewards early adopters and builds traction. Simulations show this can drive initial momentum without oversupplying in the long term. But it depends on your goal: early growth or sustained retention.
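One way to quantify the trade-off is to measure how much of a fixed supply each schedule releases early. This hypothetical comparison pits a Bitcoin-style halving schedule against an evenly spread one. The period counts are placeholders.

```python
def halving_schedule(total_supply: float, periods: int, halving_every: int) -> list:
    """Front-loaded, Bitcoin-style: emission per period halves every `halving_every` periods."""
    weights = [0.5 ** (t // halving_every) for t in range(periods)]
    scale = total_supply / sum(weights)  # normalise so the schedule emits exactly total_supply
    return [w * scale for w in weights]


def linear_schedule(total_supply: float, periods: int) -> list:
    """Evenly spread: the same emission every period."""
    return [total_supply / periods] * periods


def share_emitted_by(schedule: list, period: int) -> float:
    """Fraction of the schedule's total emitted in the first `period` periods."""
    return sum(schedule[:period]) / sum(schedule)


front = halving_schedule(1_000_000, periods=16, halving_every=4)
flat = linear_schedule(1_000_000, periods=16)
print(f"first 4 periods: front-loaded {share_emitted_by(front, 4):.1%}, flat {share_emitted_by(flat, 4):.1%}")
```

With 16 periods and a halving every 4, the front-loaded schedule emits about 53% of supply in the first quarter of its life. The flat schedule emits 25% over the same span.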

Marcos: What surprised you the most while using this tool?
Renee: How sensitive everything is to demand shifts. Even small tweaks to adoption rate break the Sharpe ratio. Demand is often underestimated or oversimplified in token models. I now treat it as the core design variable.

Raph: Could Monte Carlo also be used to simulate governance systems?
Renee: Absolutely. You’d need to define voter behavior, participation frequency, delegation dynamics, etc. But Monte Carlo is great for modeling noisy, multi-agent systems like governance. I’d love to build that.
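A governance Monte Carlo could start as small as estimating how often a proposal reaches quorum. This sketch assumes each voter turns out independently with a fixed probability. It ignores delegation changes over time. The function and parameter names are hypothetical.

```python
import random


def quorum_probability(voting_power: list, participation_prob: list, quorum_share: float,
                       runs: int = 5000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often turnout reaches quorum.

    voting_power: tokens held by, or delegated to, each voter
    participation_prob: each voter's independent chance of voting on a given proposal
    quorum_share: fraction of total voting power required for quorum
    """
    rng = random.Random(seed)
    total = sum(voting_power)
    hits = 0
    for _ in range(runs):
        turnout = sum(vp for vp, p in zip(voting_power, participation_prob) if rng.random() < p)
        if turnout >= quorum_share * total:
            hits += 1
    return hits / runs


power = [60, 10, 10, 10, 10]  # one large delegate plus four smaller voters
turnout_odds = [0.5, 0.9, 0.9, 0.9, 0.9]
print(quorum_probability(power, turnout_odds, quorum_share=0.5))
```

In the example, quorum depends almost entirely on whether the large delegate shows up, so the estimate sits near 50%.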

Strategic Takeaways

For protocols designing tokenomics today, one thing is clear: assumptions aren’t enough. Renee’s core message landed hard during the session: “Tokenomics is design under uncertainty.” If you’re not modeling that uncertainty, you’re just hoping for the best.

This tool gives protocol teams a new way to think. Instead of starting with emissions charts and fixing the narrative after, you start with the mess: demand volatility, liquidity gaps, user behavior. You run simulations, test edge cases, and build from what survives. It’s a mindset shift.

From StableLab’s perspective, the biggest unlock is visibility. This system doesn’t spit out one “ideal” outcome; it maps the range of possible ones. It shows how emissions can track usage, not just time; how staking parameters shape user retention; and most importantly, how fragile demand really is. Many token models break not from hacks or crashes, but from quiet mismatches between design and behavior. Simulations like this surface those cracks early.

Monte Carlo doesn’t replace strategy; it sharpens it. It helps DAOs confront trade-offs before they’re live on-chain. In a space still plagued by wishful tokenomics, that’s more than helpful. It’s overdue.


Coming Up Next

In our next Interview Series, we sit down with the team behind the DeFi Education Fund to explore how advocacy, policy, and on-chain governance intersect. From regulatory strategy to grantmaking infrastructure, we’ll unpack how DAOs can defend their interests and shape the rules without losing sight of decentralization.
