Every DAO hits the same wall. Too many conversations. Too many dashboards. Not enough synthesis.

Governance was meant to speed things up, yet it’s now the bottleneck: decisions get trapped in endless discussion and context is forgotten. AI offers a way out. Not by replacing humans, but by teaching organizations to remember and reason.

In this session, Mel and Kaf explain how to build that feedback loop: how AI can standardize reasoning, surface evidence, and turn complexity into clarity, creating what we call the augmented organization.

Meet the Guests

Mel and Kaf explore how AI turns governance from episodic decisions into a continuous learning system.

Mel, Governance Analyst at StableLab, brings two decades of experience designing control and assurance frameworks, now applied to decentralization and evidence-first reporting.

Kaf, Governance Analyst at StableLab, focuses on AI-driven evaluation models and organizational cognition, building tools that turn reasoning and verification into collective intelligence.

They reflect StableLab’s focus on evidence, accountability, and automation in DAO governance.

From Collective Intelligence to Collective Cognition

For centuries, organizations were built around human limits: slow communication, short memory, and the need for hierarchy to stay coherent. Bureaucracy preserved knowledge but slowed learning.

AI flips that logic. Instead of pushing data upward, it lets context flow sideways. Knowledge becomes shared, not siloed. The organization begins to act less like a hierarchy and more like a network of reasoning that can sense, adapt, and learn.

In this new architecture:

Memory becomes collective. Conversations and outcomes feed a knowledge base that never resets.

Coordination becomes adaptive. Agents trace dependencies and reduce duplication.

Reflection becomes structural. Dashboards evolve from tracking metrics to mapping reasoning.

Efficiency no longer means speed; it means clarity.

That’s the paradox DAOs were meant to solve. Transparency and openness brought participation, but also noise. AI alone doesn’t fix that; structure does.

Large language models can support analysis only when guided by clear rubrics and standardized reports that make reasoning comparable and verifiable. Many DAOs are now searching for that discipline: the ability to turn raw data into repeatable judgment.
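To make "repeatable judgment" concrete, here is a minimal sketch of a weighted rubric. The criteria names, weights, and the 0 to 5 rating scale are hypothetical placeholders chosen for illustration, not a rubric the speakers presented.

```python
# Hypothetical rubric: criterion name -> weight. Names and weights are
# illustrative assumptions, not a published standard.
RUBRIC = {"evidence": 0.4, "feasibility": 0.3, "alignment": 0.3}


def score_report(ratings, rubric=RUBRIC, scale_max=5):
    """Return a weighted score in [0, 1] from per-criterion ratings on 0..scale_max."""
    missing = set(rubric) - set(ratings)
    if missing:
        # Refuse to score an incomplete report so scores stay comparable.
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in rubric:
        if not 0 <= ratings[name] <= scale_max:
            raise ValueError(f"rating for {name!r} must be between 0 and {scale_max}")
    total_weight = sum(rubric.values())
    weighted = sum(rubric[name] * ratings[name] / scale_max for name in rubric)
    return weighted / total_weight


if __name__ == "__main__":
    ratings = {"evidence": 4, "feasibility": 2, "alignment": 3}
    print(round(score_report(ratings), 2))
```

Because every report is scored against the same criteria and weights, two reviewers (human or model) can disagree on a rating and still point to exactly where they differ.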

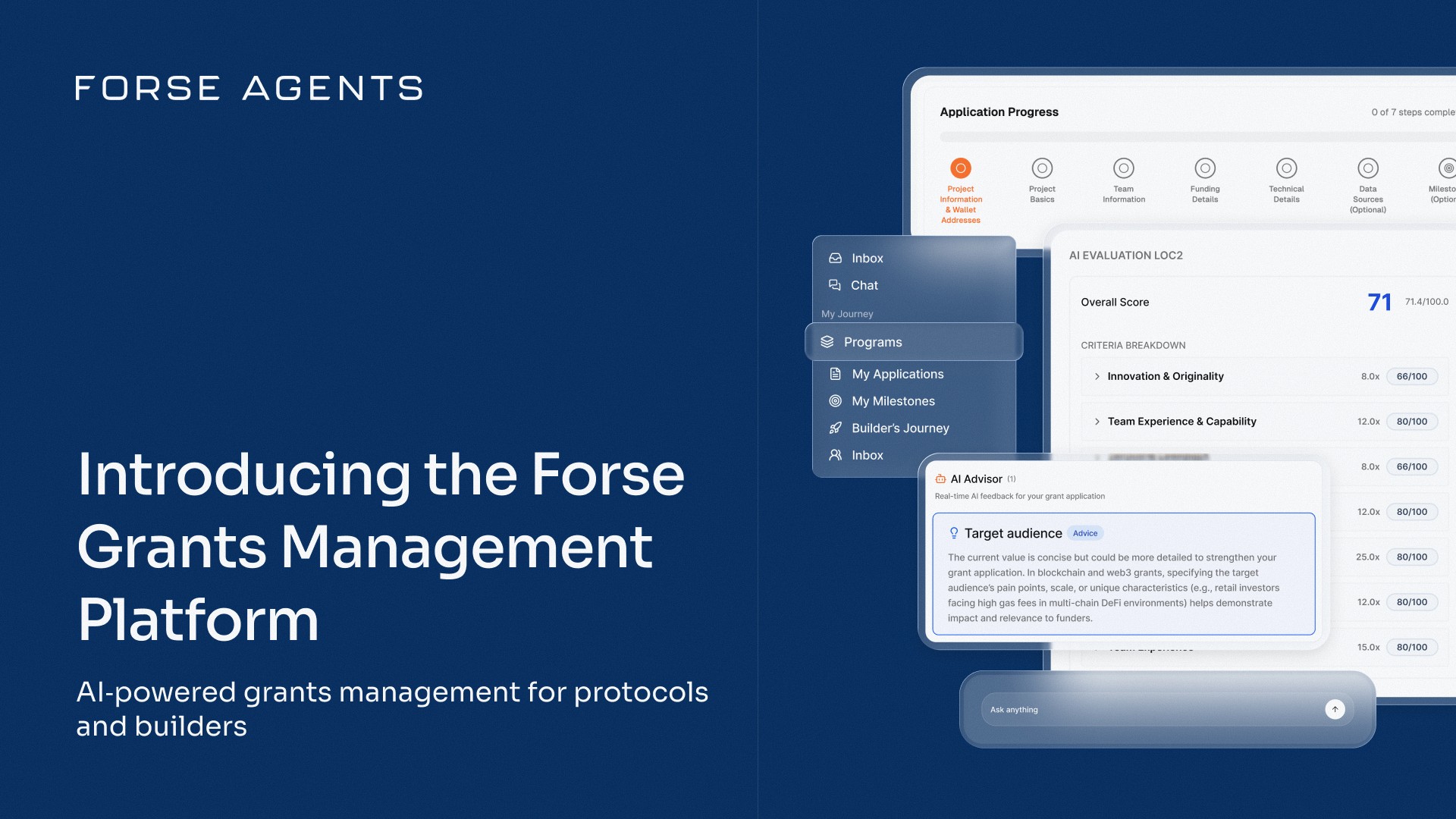

The next wave will come through AI agents for evaluation, reporting, and assurance: systems that learn from shared data and connect through common standards. Tools like Forse already point this way, turning dashboards into live intelligence layers that reveal how governance performs.

An augmented DAO doesn’t automate judgment; it enhances it, linking structure with synthesis so every decision builds on the last.

Q & A

Once the group had explored how agents are built and how decentralization metrics can be automated, the session moved into an open Q&A.

Raph: How would you define an augmented organization in one line?

Kaf: It’s a system where humans and AI share cognition. Humans provide ethics and context; AI preserves memory and pattern recognition. Together they create a feedback loop that learns as it operates.

Kene: Many worry that automation conflicts with decentralization. Can both coexist?

Mel: Yes. The key is verification. Every AI output should cite its sources and limits. When the process is auditable, automation strengthens accountability rather than concentrating power.
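One way to picture the "cite its sources and limits" rule is as a record that every AI output must fill in before it is accepted. The sketch below is an assumption for illustration: the `AuditableOutput` type, its field names, and the sample identifiers are hypothetical, not part of any StableLab tool.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class AuditableOutput:
    """An AI-generated statement plus what it rests on (hypothetical schema)."""
    claim: str
    sources: Tuple[str, ...]  # identifiers of the evidence the claim cites
    limits: Tuple[str, ...]   # stated caveats, e.g. data gaps or time windows


def is_auditable(output: AuditableOutput) -> bool:
    """Accept an output only if it has a claim, at least one source, and at least one stated limit."""
    return bool(output.claim.strip()) and bool(output.sources) and bool(output.limits)


if __name__ == "__main__":
    # Placeholder identifiers, invented for this example.
    ok = AuditableOutput(
        claim="Turnout fell across the last three votes.",
        sources=("vote-record-1", "vote-record-2", "vote-record-3"),
        limits=("Covers on-chain votes only.",),
    )
    unsourced = AuditableOutput(claim="Turnout fell.", sources=(), limits=())
    print(is_auditable(ok), is_auditable(unsourced))
```

The check is deliberately strict: an output with no stated limits is rejected just like one with no sources.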

Nneoma: What’s the biggest misconception about AI in governance?

Kaf: That it replaces people. It doesn’t. It replaces guesswork. The advantage is validation: decisions grounded in evidence rather than influence.

Raph: Where should teams start if they want to integrate AI?

Mel: With structure. Standardize proposals, rationales, and reports. Once reasoning follows a consistent logic, AI can maintain it. Augmentation starts with clarity, not code.

Kene: Should AI sit at the edge of governance or at its core?

Kaf: At the core. AI is governance infrastructure. It belongs where context circulates: in evaluation, reporting, and reflection. That is how knowledge compounds instead of fragmenting.

Nneoma: If machines handle transparency, how do we keep trust human?

Mel: By making every output explainable. In governance, verified will always beat believed.

Raph: Efficiency has always been the holy grail of organizations. How does augmentation redefine it?

Kaf: Efficiency now means clarity. The fastest organization is not the one that moves first; it is the one that understands what it is doing while it moves.

Kene: Can AI actually help DAOs prove decentralization to external stakeholders?

Kaf: Definitely. AI can track participation, voting concentration, proposer diversity, and delegation patterns. It turns ideals into measurable and auditable metrics.
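As a rough illustration of how such metrics could be computed, the sketch below works from plain Python data. The input shapes (`voter`, `proposer` keys, a voter-to-power mapping) and the specific formulas are assumptions chosen for the example, not the method used in the session; delegation patterns are left out for brevity.

```python
def participation_rate(votes, eligible_voters):
    """Share of eligible voters who cast at least one vote."""
    if eligible_voters <= 0:
        return 0.0
    return len({v["voter"] for v in votes}) / eligible_voters


def voting_concentration(power_by_voter):
    """Herfindahl-Hirschman index of voting power: 1/n when even, 1.0 when one voter holds all."""
    total = sum(power_by_voter.values())
    if total == 0:
        return 0.0
    return sum((p / total) ** 2 for p in power_by_voter.values())


def min_voters_for_majority(power_by_voter):
    """Smallest number of top voters whose combined power strictly exceeds 50%."""
    total = sum(power_by_voter.values())
    running = 0
    ranked = sorted(power_by_voter.values(), reverse=True)
    for count, power in enumerate(ranked, start=1):
        running += power
        if running > total / 2:
            return count
    return 0  # no voting power recorded


def proposer_diversity(proposals):
    """Distinct proposers divided by total proposals (1.0 means every proposal has a different author)."""
    if not proposals:
        return 0.0
    return len({p["proposer"] for p in proposals}) / len(proposals)


if __name__ == "__main__":
    # Toy data, invented for this example.
    power = {"a": 50, "b": 30, "c": 20}
    votes = [{"voter": "a"}, {"voter": "b"}, {"voter": "a"}]
    proposals = [{"proposer": "a"}, {"proposer": "a"}, {"proposer": "c"}, {"proposer": "b"}]
    print(participation_rate(votes, eligible_voters=4))
    print(round(voting_concentration(power), 2))
    print(min_voters_for_majority(power))
    print(proposer_diversity(proposals))
```

Each function is small enough to audit by hand, which matters as much as the number it returns when the goal is to show the result to external stakeholders.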

Nneoma: What are the main risks?

Mel: Over-automation erodes accountability, bias replicates human blind spots, and cultural inertia resists change. The fix is simple: keep humans in the loop and verification in the process.

Raph: And the opportunities?

Kaf: Transparent governance pipelines, reflexive strategy cycles, and real-time risk monitoring. Across all of them, the pattern is the same: systems that learn faster than they fail.

Kene: So what does thinking together look like in practice?

Mel: It looks like every decision, conversation, and metric feeding back into shared memory. Humans define intent; AI contextualizes it. Over time, the organization stops reacting and starts responding.

Closing Thoughts

AI isn’t replacing governance; it’s professionalizing it.

When reasoning becomes verifiable and memory persistent, decisions stop being isolated and start compounding.

The practical outcome is simple: less repetition, faster reviews, and governance that finally scales with the ecosystem’s pace.
